You are here: Foswiki>DataServices Web>WebHome (02 Aug 2007, MarcoMambelli)Edit Attach

Tier2 Data Services |

Overview

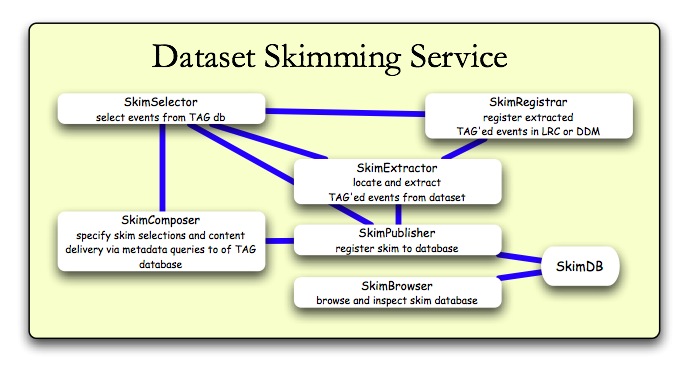

A number of Tier2-centric data services need to be operational in order to efficiently use the cluster, network, and storage resources of a Tier2 center, and to provide optimal access to (primarily) AOD, ESD and TAG datasets for ATLAS physcists. Initial focus is on development of a Data Skimming Service which uses metadata and TAG database queries to efficiently process Tier2-based AOD processing tasks.

Description

Deliver a service to provide simple and efficient access to, and event extraction from, datasets locally resident at Tier2 centers. Considerations:- A Tier2 center will have a full replica of all ATLAS AOD datasets, according to the Computing TDR.

- Focus on the most common use cases for data skimming

- Do these simple, standard tasks more efficiently and easily than what a physicist would need to do himself.

- Articulate these use cases. For example:

- Tell me what datasets are available at the Tier2 (eg. using DDM browser). Add analysis checks:

- I the dataset complete?

- Are they accessible - fileservers working?

- Is the catalog contents consisent with disk?

- Tell me the content and format (containers, POOL format, etc).

- Give me all events subject to (

cut set 1, cut set 2), saving only the (jets, electrons) objects in the output file. - Put those files into the Tier2

output buffer, easily accessible to local, Tier3, etc. - Let me know when its complete, and if there were any errors. Also, how long I have to get them before the space is reclaimed.

- Tell me what datasets are available at the Tier2 (eg. using DDM browser). Add analysis checks:

- Provide this initially as a local service

- Focus on simplicity of design and operation.

- Avoid grid services, at least initially, but use various backends if available (eg. Pathena for distributed skimming).

- Avoid remote, centralized services, systems and catalogs where possible.

Software Components

- SkimUtil

- SkimComposer

- SkimSelector

- SkimExtractor

- SkimRegistrar

- SkimPublisher

- SkimBrowser

- DssPrototype

Tier2 infrastructure and services

- Setup of a data services machine (

tier2-06.uchicago.edu) - DatasetsUCdCache - Notes about setting up datasets at UC dCache (UC_VOB)

- DQ2Subscriptions - how to subscribe to a dataset

- Pathena Tests - backend for distributed skimming

- TagDBMysql - database schema testing

- TagDBView - Looking at the data in the TAG DB

- TagQueryEngine - initial ideas

- SkimTest061212 - automated Skim test and result validation

- TagNavigatorToolOsg - Using the TAG Navigator Tool in an OSG environment

- UsingDQ2AtUC - How to set up and use DQ2 User Tools

Project plan

The project fits into the US ATLAS project plan in the Data Services section of the Facilities plan (WBS 2.3.4).Meeting notes and action items

- NotesMay31

- NotesAug1

- NotesAug8

- NotesAug15

- NotesOct3

- NotesOct10

- NotesOct17

- NotesNov1

- NotesNov7

- NotesNov14

- DataServiceToDo (last updated:8/7/06)

- NotesDec5

- NotesDec19

- MeetingNotes070411

- MeetingNotes070418

- NotesMay2

- MeetingNotes070516

- MeetingNotes070802

Tier2 Database Infrastructure

What are the Tier2 activities associated with providing the appropriate ATLAS database infrastructure necessary for Monte Carlo production and AOD analysis?Discussions from the Tier2 Workshop

General agreement that it was necessary to provide Tier2 centers with a recipe for building/providing the necessary database infrastructure, including Mysql database services, Squid caches, etc.- Meeting website: http://www.usatlas.bnl.gov/twiki/bin/view/Admins/Tier2Workshop0606

- Storage and database services program of work, here.

Tag and Event Store

Conditions and Tag databases

Action items:- Test database services for Condition and Tag databases

- Start with the current model for deployment and access

- Develop requirements based on use cases

- Provide a deployment recipe (Pacman-based) and documentation.

Calibration and Alignment Challenge

Check the readiness for the Calibration Alignment challenge (CAC will be this fall, after Release 13, at the end of September). This must be done for all Tier2 centers, and should use the results of prototyping activities.- Provide a database service (one machine with DB server hosting Tag and Conditions DB - will be finalized by prototyping):

- Tag: probably a single dedicated mysql server.

- Conditions: probably file based database like SQLlite, but may be mysql.

- Access to these services will be local, from within the Tier2 site.

- Have at least one static replica of the whatever Calibration and Conditions are needed for the challenge (less than 1 TB).

- Be prepared to have one dedicated server with a 1 TB of space.

Related Links

Other groups working to similar projects:- Glasgow: Caitriana Nicholson and Helen Mc Glone: working on the ATLAS tag database, and in particular at the moment looking at how to integrate the tag database with the Distributed Data Management (DDM) system, DQ2.

- Tag Navigator Tool Wiki

- http://ppewww.ph.gla.ac.uk/~caitrian/tag/

- Installation of site services at Glasgow from Caitriana.

- Information about POOL collection utilities from Caitriana

- The GridPP metadata wiki, hosted at Glasgow, is at http://www.physics.gla.ac.uk/metadata/index.php/. There is a page on EventLevelMetadata which we can use. To ask for an account, email Paul Millar (p.millar@physics.gla.ac.uk).

- You can see the DQ2 catalogue schemas at http://ppewww.ph.gla.ac.uk/~caitrian/tag/dq2_0_2_5_schema.html. I did it for DQ2 0.2.5 but as far as I can see it has not changed since then. They are planning to change the content catalogue schema round about September time. The schema for the Rome tags in the MySQL database is attached (here).

- CERN: the Database Deployment and Operations activity consists in the development and deployment (in collaboration with the WLCG 3D project) of the tools that allow the worldwide distribution and installation of databases and related datasets, as well as the actual operation of this system on ATLAS multi-grid infrastructure.

- Tag Content For more details see the final report attached at the end.

- Access to Atlas CVS using SSH or kerberos (if you can get it to work)

DataServices Web Utilities

-- MarcoMambelli - 25 May 2006 -- RobGardner - 07 Aug 2006| I | Attachment | Action | Size | Date | Who | Comment |

|---|---|---|---|---|---|---|

| |

dss-v1.jpg | manage | 61 K | 06 Nov 2006 - 16:20 | RobGardner | dataset skimming service, v1 |

| |

dss-v2.jpg | manage | 66 K | 15 Nov 2006 - 21:49 | RobGardner | v2 |

| |

robots.txt | manage | 26 bytes | 31 May 2007 - 19:23 | UnknownUser |

Edit | Attach | Print version | History: r49 < r48 < r47 < r46 | Backlinks | View wiki text | Edit wiki text | More topic actions

Topic revision: r49 - 02 Aug 2007, MarcoMambelli

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors. Ideas, requests, problems regarding Foswiki? Send feedback