
UltraLightKernel

Jan 19 2012 UltraLightKernel 3.2.1 Released

Nov 1 2011 UltraLightKernel 3.1-rc4 Released

Built to get NFSv4.1 working on Scientific Linux 5.5 for use with dCache.

- http://uct2-grid1.uchicago.edu/repo/MWT2/kernel-3.1.0-rc4UL1.el5.x86_64.rpm by NathanYehle (tested in ATLAS production)

- http://uct2-grid1.uchicago.edu/repo/MWT2/kernel-firmware-3.1.0-rc4UL1.el5.x86_64.rpm

- http://uct2-grid1.uchicago.edu/repo/MWT2/kernel-3.1.0-rc4UL1.el5.src.rpm

Oct 7 2011 UltraLightKernel 3.0.4 Released

This kernel has CONFIG_PREEMPT_NONE=y enabled by default and includes newer mptsas and Myricom drivers. Based on Shawn McKee's 2.6.38-UL5.

- http://uct2-grid1.uchicago.edu/repo/MWT2/kernel-3.0.4-UL1.el5.x86_64.rpm by NathanYehle (untested in ATLAS production)

- http://uct2-grid1.uchicago.edu/repo/MWT2/kernel-firmware-3.0.4-UL1.el5.x86_64.rpm

- http://uct2-grid1.uchicago.edu/repo/MWT2/kernel-3.0.4-UL1.el5.src.rpm

Background

Ultra Light Kernel

- Originally built by The Network Work Group.

- MWT2 and the ITB have expanded upon the Ultralight team's work to create a kernel optimized for use with XRootD and dCache.

- There have been several modifications made to the stock kernel config options towards this end.

- The main one is this kernel config change from the stock SL kernel configuration:

CONFIG_PREEMPT_NONE=y Select this option if you are building a kernel for a server or scientific/computation system, or if you want to maximize the raw processing power of the kernel, irrespective of scheduling latencies.
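To confirm which preemption model a given kernel was built with, the config file can be inspected directly. The following is a minimal sketch, not part of the UltraLight tooling: the function names are hypothetical, and it assumes the config is available at `/boot/config-<release>` or `/proc/config.gz`, which depends on how the kernel was packaged.

```python
import gzip
import os
import platform


def parse_kernel_config(text):
    """Return a dict of the CONFIG_* options that are set in kernel config text."""
    options = {}
    for line in text.splitlines():
        line = line.strip()
        # Unset options appear as comments, e.g. "# CONFIG_PREEMPT is not set".
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep and key.startswith("CONFIG_"):
            options[key] = value
    return options


def preemption_model(options):
    """Return the name of the preemption option set to 'y', or None."""
    candidates = ("CONFIG_PREEMPT_NONE", "CONFIG_PREEMPT_VOLUNTARY", "CONFIG_PREEMPT")
    for name in candidates:
        if options.get(name) == "y":
            return name
    return None


def read_running_kernel_config():
    """Read the running kernel's config text, or return None if it is not found.

    Assumption: the config lives in one of the two conventional locations.
    """
    boot_path = "/boot/config-" + platform.release()
    if os.path.exists(boot_path):
        with open(boot_path) as f:
            return f.read()
    if os.path.exists("/proc/config.gz"):
        with gzip.open("/proc/config.gz", "rt") as f:
            return f.read()
    return None
```

Calling `preemption_model(parse_kernel_config(read_running_kernel_config()))` on a host running one of these kernels would be expected to report `CONFIG_PREEMPT_NONE`, provided the config file is present.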

Testing

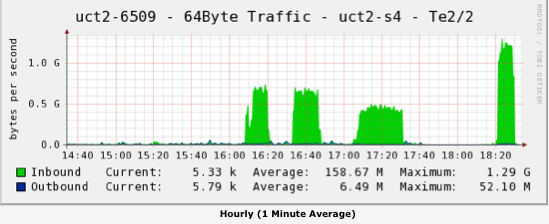

The modifications doubled XRootD throughput at MWT2, as shown by this benchmark: "The three peaks are two dccp throughput tests followed by an xrdcp test both on the stock kernel, and then an xrdcp test on the UL5 kernel. The subsequent dccp test on UL5 also showed the same 1.2GB/s throughput, so it's clear to me that the kernel tunings make a significant difference." -Aaron


More about the tests by Aaron:

- We had 6 files, each 1GB in size. These files were placed one per tray on each of 6 trays of 15 2-TB disks in RAID-6. The data server in question has 24GB of RAM and a 10Gb Ethernet link. The test was performed from 100 worker nodes, which all attempted to read all six files in parallel. As a note, it's unlikely that this was bottlenecked or affected by the RAID cards, because all 6 files would easily fit in the kernel page cache. So this is mostly a test of page-cache->xrootd->NIC throughput rather than RAID throughput.
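The figures quoted for this test can be checked with some quick arithmetic. The sketch below is only illustrative: it assumes decimal units (1 GB = 10^9 bytes), that each worker node reads each file completely exactly once, and that the 1.2GB/s rate is sustained for the whole run. The function name and result keys are hypothetical.

```python
GB = 10**9  # decimal gigabyte (assumption; the page does not say GB vs GiB)


def benchmark_summary(link_gbit=10, observed_bytes_per_s=1_200_000_000,
                      n_files=6, file_bytes=GB, ram_bytes=24 * GB,
                      n_clients=100):
    """Derive headline numbers from the test parameters described above."""
    line_rate = link_gbit * 10**9 / 8       # bytes/s the NIC can carry at most
    working_set = n_files * file_bytes      # bytes that must sit in page cache
    total_served = n_clients * working_set  # every client reads every file once
    return {
        "line_rate_bytes_per_s": line_rate,
        "link_utilization": observed_bytes_per_s / line_rate,
        "working_set_fraction_of_ram": working_set / ram_bytes,
        "total_bytes_served": total_served,
        "seconds_at_observed_rate": total_served / observed_bytes_per_s,
    }


summary = benchmark_summary()
for key, value in summary.items():
    print(key, value)
```

Under these assumptions the 6 GB working set is a quarter of the server's RAM, which supports the page-cache argument, and 1.2GB/s is about 96% of the 1.25 GB/s a 10Gb link can carry, so the UL5 result is close to the limit of the NIC.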

SL 5.x RPMs

- 2.6.36-UL4 original collaboration with MWT2, ITB and Ultralight, in production use at MWT2

- 2.6.36-UL5 ITB release of UL5 by SuchandraThapa (adds AFS and virtio features for KVM), in production use at MWT2

- 2.6.38-UL1 ITB release of 2.6.38 UL1 (last stable release, no AFS support) by NathanYehle

- 2.6.39-UL1 by NathanYehle (pNFS mounts hang on WAN links; pNFS mounts over LAN links work in production)

SRPMs

- http://uct2-grid1.uchicago.edu/repo/MWT2/kernel-2.6.36-UL5.src.rpm

- http://uct2-grid1.uchicago.edu/repo/MWT2/kernel-2.6.38-UL1.src.rpm

- http://uct2-grid1.uchicago.edu/repo/MWT2/kernel-2.6.39-UL1.src.rpm

Driver Matrix

| Kernel | megaraid_sas | mpt2sas | ixgbe | myri10ge | notes |
|---|---|---|---|---|---|
| 2.6.36-UL5 | 00.00.04.17.1-rc1 | 06.100.00.00 | 2.1.4-NAPI | 1.5.1 | |
| 3.2.1-UL1 | 00.00.06.12-rc1 | 10.100.00.00 | 3.6.7-k | 1.5.3-1.534 | |
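One way to check a running host against this matrix is to read the version each loaded module reports under `/sys/module/<name>/version`. This is a minimal sketch with hypothetical helper names. It assumes the version strings in the 3.2.1-UL1 row belong to megaraid_sas, mpt2sas, ixgbe, and myri10ge in that order, and that each driver is loaded and exports a version string (a driver that is not loaded or exports no version is reported as `None`).

```python
import os

# Assumed mapping of the 3.2.1-UL1 row onto the driver columns.
EXPECTED_3_2_1_UL1 = {
    "megaraid_sas": "00.00.06.12-rc1",
    "mpt2sas": "10.100.00.00",
    "ixgbe": "3.6.7-k",
    "myri10ge": "1.5.3-1.534",
}


def loaded_driver_version(module, sysfs_root="/sys/module"):
    """Return the version string a loaded module exports, or None if unavailable."""
    path = os.path.join(sysfs_root, module, "version")
    try:
        with open(path) as f:
            return f.read().strip()
    except OSError:
        return None


def compare_drivers(expected, sysfs_root="/sys/module"):
    """Map each module to a tuple (expected, found, matches)."""
    report = {}
    for module, want in expected.items():
        have = loaded_driver_version(module, sysfs_root)
        report[module] = (want, have, have == want)
    return report
```

For example, `compare_drivers(EXPECTED_3_2_1_UL1)` returns one entry per driver, which makes it easy to spot a host still running an older module. The `sysfs_root` parameter exists only so the check can be pointed at a test directory.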

References

| Attachment | Size | Date | Who | Comment |
|---|---|---|---|---|
| dccpx2+xroot+xroot_UL5.png | 66 K | 28 Mar 2011 - 18:54 | UnknownUser | dccpx2 xroot xroot UL5 tests |


Topic revision: r17 - 19 Jan 2012, NathanYehle

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
